You’ll NEVER GUESS How Conspiracy Theory is Being Used on YouTube!!!: Conspiracy Theory Signalling

Jul 12, 2021

By Cornelia Sheppard Dawson, Student Research Assistant on the Infodemic project

An anonymous search for the terms ‘truth’ or ‘conspiracy’ together with ‘coronavirus’ on YouTube returns hundreds of results. Videos with titles such as ‘Truth and Fiction Coronavirus: Gregg Braden’, ‘THE REAL TRUTH ABOUT CORONAVIRUS by Dr. Steven Gundry’ and ‘Is the coronavirus a conspiracy?’ are recommended, many with millions of views. These videos are mostly preying on the uncertainty of the pandemic to draw in viewers, promising clarity and cohesion. However, they also employ the language of conspiracy theory, implying that they may provide the type of alternative narrative that many conspiracy theorists look for. The result is that the comments sections of these videos are full of conspiracy theories, from ‘COVID-19 is a bioweapon’ to ‘the Great Reset’. There have been plenty of videos going viral that hide conspiracy theory behind a legitimate media aesthetic, whether this be the pseudo-documentary style of ‘Plandemic’, or the high production values of Epoch Times. But in this blog post I want to consider a slightly different genre of video. A significant number of high-performing “conspiracist” YouTube videos collected by our data methods team hide their lack of conspiracy theory behind misleading titles that bring conspiracy theorists into the comments sections.

For example, consider the video titled ‘Celebs PAID To Say They Have Coronavirus?!?’ (27 March, 2020) from the channel ‘Clevver News’. The title implies some kind of conspiratorial action: that elements of the COVID-19 narrative are being faked, and that this fakery is being orchestrated by a shadowy organisation that has something to gain from inciting fear about the coronavirus. The content of the video, however, does not constitute a conspiracy theory. The channel, which has 4.82 million subscribers, mainly reports on celebrity gossip and news, whereas conspiracy theorists generally regard the Hollywood elite with disdain. The video itself barely references the conspiracy which its title suggests. It doesn’t bring up evidence or primary material, or even mention who is spreading this rumour. Instead, it focuses on actors’ reactions to the rumour in a way that is in keeping with the channel’s brand. The host, Sussan Mourad, ties up the video by saying, “Ok guys, so right here, right now, let’s vow to put a stop to the rumour that celebrities are using coronavirus for clout.” She is not entertaining the idea that the rumour is true; instead, it was used purely as clickbait. Therefore, the form, content and target audience of this video have nothing to do with a conspiracy theory. Only the title labels it this way.

This label is enough to bring conspiracy theorists to the video. The comments section is full of conspiracy theories, suggesting that because of their profession, it is feasible that actors would be paid to lie. Other doubts about the virus are expressed in the comments. One user asks whether anyone actually knows someone with the virus. Another user replies:

“I have a doctor friend whos trying to convince me but I still have a hard time… there’s something fishyyy.. corona may exist but this whole thing doesnt add up.. she’s working in corona section and no one has died so far! but the rates they give on the news says otherwise!”

Others agree, writing:

“exactly! I have yet to know anyone with it?! And all of us replying in yt, they got a billions of people replying on this thing and I have not read comment that said they had the fken thing.”

These are some of the more moderate examples, but they show a general space of misinformation, disinformation, and doubt in mainstream social media.

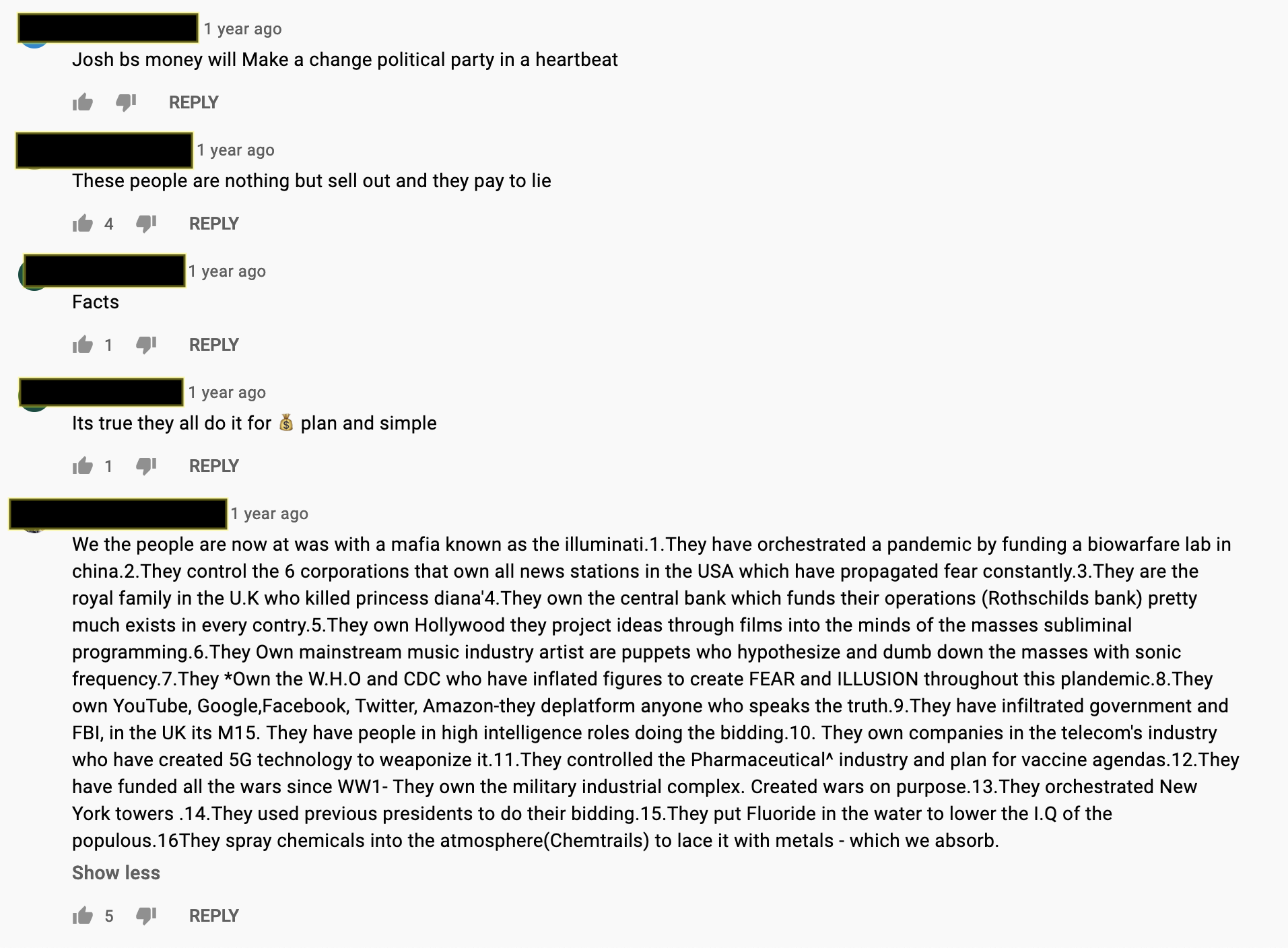

Fig 1.: Comments on the YouTube video ‘Celebs PAID To Say They Have Coronavirus?!?’.

The comments also include recommendations to watch conspiracy theorists like David Icke and Dr. Buttar, as well as links to less mainstream platforms like BitChute. The comments section is therefore not only a place for conspiracy theory, even when videos with actual conspiracist content may have been deplatformed, but also a place for community interaction and the promotion of conspiracist ideas. This is a trend across videos that signal to the conspiracy curious: conspiracy theory expands into the comments section, regardless of the content of the video itself.

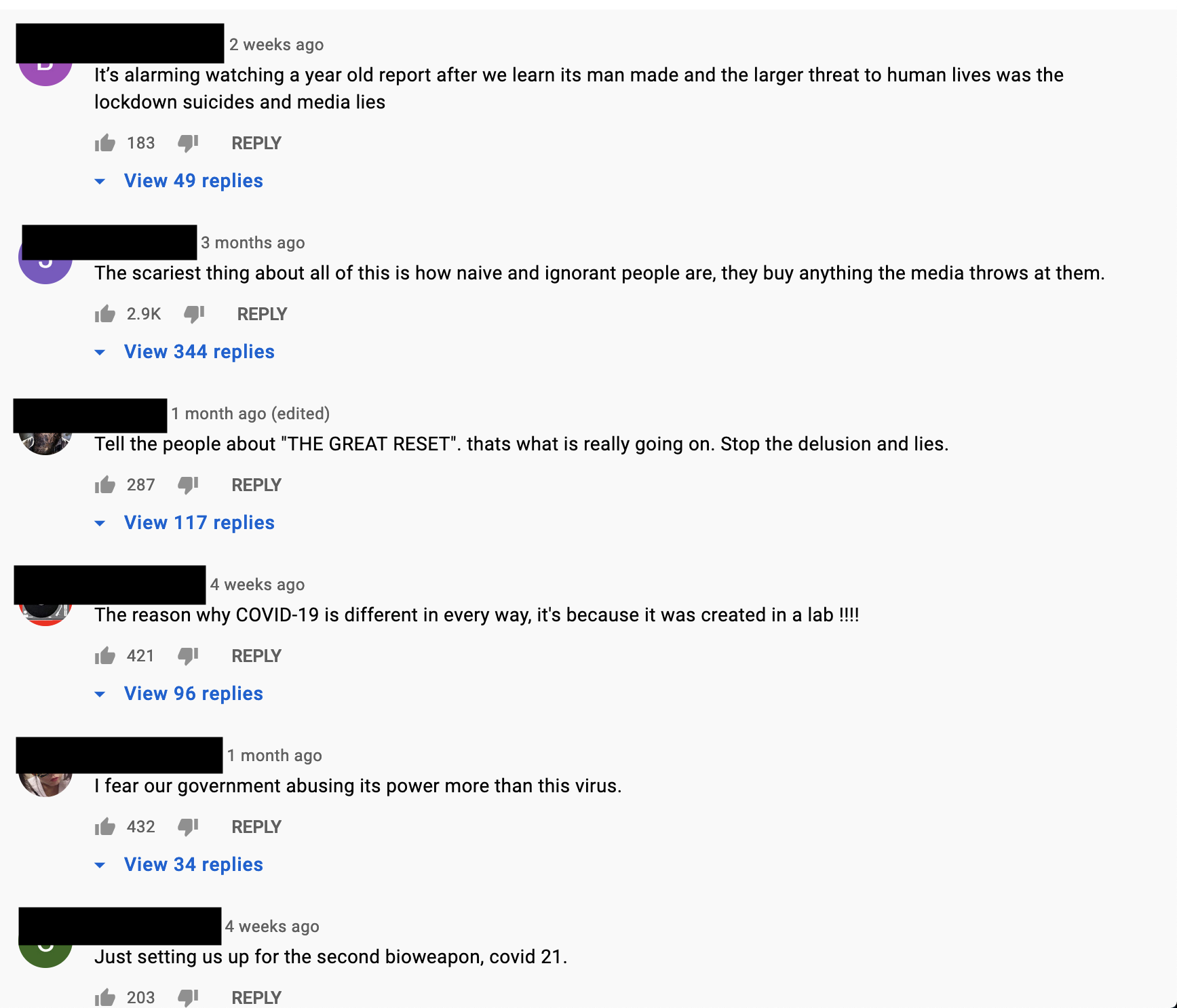

Fig 2.: Comments on the YouTube video ‘THE REAL TRUTH ABOUT CORONAVIRUS by Dr. Steven Gundry’.

There are generally two categories of comment. The first is characterised by short comments that are either vaguely conspiracist, or present doubt about the mainstream narrative. The authors of these comments are more likely to have been recommended these videos, or to have searched for them, without necessarily having become “red-pilled”. The second category of comment is characterised by longer, more thought-out, detailed paragraphs, presenting overarching conspiracy theories in which COVID-19 plays only a small part. In contrast to the authors of the first category of comments, these users appear fully immersed and literate in the conspiracy theory world. The question one might want to ask of these conspiracy theory veterans is: Why engage with YouTube when so much of the primary content they should be interested in has been deplatformed?

To answer that question, it is important to understand the landscape that deplatforming has created. According to YouTube’s policies, a conspiracist video is removed if it “targets an individual or group with conspiracy theories that have been used to justify real-world violence,” but the judgment is not clear cut. In 2019, popular YouTuber Shane Dawson’s conspiracy theory video was de- and then replatformed fully monetised. The initial decision to remove the video was reversed because YouTube decided that Dawson was simply describing conspiracy theories rather than promoting them. This is the grey area within which such videos operate: they use the language of conspiracy theory to draw in viewers for maximum audience traffic, without being deplatformed.

Due to these grey areas, conspiracy theory can exist on YouTube beyond the content of the videos themselves. As Emillie de Keulenaar, Anthony Burton and Ivan Kisjes identify, it is not only videos that contain conspiracy theories that can be used by the conspiracy theorist community. They argue that some videos “are still consumed as conspiratorial sources. They may be nominally authoritative—some include news channels, others are TED Talks–but they are viewed by users as evidence for their conspiracy theories” (forthcoming). Even if these videos were to be deplatformed or demoted, the act of doing so would further justify the conspiracy theorist’s paranoia that evidence is being covered up. Left public, however, they enable a space for conspiracy theory to thrive unchecked. As de Keulenaar et al. conclude, “YouTube is less used as a platform for conspiracy making (i.e. discussing, amalgamating and sharing findings among co-subscribed conspiracy channels), and becomes a living archive of and for the perpetrators conspiracy theorists accuse.” This is a flaw in the YouTube moderation system, as conspiracy theory is not confined to easy-to-identify content that can be taken down. Instead, conspiracy theorists are flexible, and can use fact-checked material to support their beliefs. Researchers on the Infodemic team and their students have observed and analysed a similar phenomenon in Amazon book reviews. The reviews for books that perpetuate mainstream knowledge but which conspiracy theorists consider part of a plot, such as Klaus Schwab’s The Great Reset, can contain conspiracy theory. It would appear that no space is too trivial, too hegemonic, or too moderated to stop conspiracy theorists from posting. All online spaces seem worth occupying to the conspiracy theorist, even if the platform itself is trying to remove them. The reasons behind this are multiple.

Deplatforming, ironically, is one reason why conspiracy theorists may stay on YouTube. Though YouTube may no longer contain conspiracist analyses that can be found on more conspiracy-tolerant sites, de Keulenaar et al. explain that YouTube functions “precisely as a source of biased information and moderation policies, and thus evidence for their conspiracies.” This can be seen in the comments of the video ‘Is the coronavirus a conspiracy?’ where one user writes, “I like how this is the only video I can find on YouTube in terms of covid conspiracies. And it just so happens to be debunking. Suspicious there aren’t any others available to see.” These videos are not watched in the hope that they have evaded the YouTube moderation function. Rather, it is precisely because they have not been taken down that they can support the conspiracy theorists’ belief that the “real truth” is being censored. Watching them is a way for conspiracy theorists to check that their narrative differs from the hegemonic one. Rather than rant into the echo chamber of unmoderated, conspiracy-tolerant, fringe platforms, conspiracist users seek out YouTube to argue against consensus reality. They seek the conversation, the debate, to secure their oppositional placement. Being deplatformed affirms a video’s radical credentials; checking in with the content that remains on the platform might reaffirm a conspiracy theorist’s own counter-consensual status.

Many of the commenters also appear hopeful that they will find like-minded people and convince others of their theories. On the ‘Is the coronavirus a conspiracy?’ video, a user writes that “the number of THUMBS DOWN is proof that people are waking up. This gives me hope!” The comments section offers a moment for communication and bonding without the effort of joining a closed group or chat. Additionally, sharing the ‘truth’ is a duty, and the recommendations of conspiracist figures and websites show that the comments section of YouTube can be an opportunity to bring in new adherents. The channels I have analysed do not contain conspiracy theory content, yet it’s clear that conspiracy theory is being allowed to flourish in the comments section of YouTube. Conspiracy theories therefore reach a wider audience. Indeed, popular channels, such as ‘Clevver News’, have viewing figures that BitChute videos rarely achieve. Employing conspiracy theory signalling may be advantageous to the channel searching for clicks, but it allows conspiracy theories to be effectively replatformed on YouTube. It means that those users simply seeking out celebrity gossip can encounter conspiracy theories they may not have bargained for. Though not inherently dangerous, this poses a problem for social media platforms that claim to be committed to stopping the spread of misinformation. When conspiracy theory is not bound to a particular space on a platform, it becomes more difficult to remove. Today, conspiracy theory has the ability to spread far from its die-hard believers into even the most unexpected corners of the Internet. This is a new challenge during the pandemic, when the spread of conspiracy theories has the potential to endanger lives.

References

Emillie de Keulenaar, Anthony Burton and Ivan Kisjes. (Forthcoming) “Deplatforming, demotion and folk theories of persecution by Big Tech.”

This post is published under the terms of the Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) licence.